Memory Management

Back to main page

Emanuele Altieri (ealtieri@cs.smith.edu)

Prof. Nicholas Howe (nhowe@cs.smith.edu)

Smith College, June 2002

Contents

- Keeping track of pages

- Allocating pages

- Parsing a free area list

- Page faults

Questions:

Q1,

Q2,

Q3,

Q4,

Q5,

Q6,

Q7.

Keeping track of pages

On Intel processors, Linux uses a page size of 4 KB (4096

bytes). This means that the address of the first byte of a

page frame is always a multiple of 0x1000 (4096).

|

Q1. Assuming a page size of 4096 bytes (4 KB),

indicate which of the following physical addresses mark

the beginning of a page: 0x0, 0x5F001, 0x7A0B00,

0x9D000, 0x860000. Indicate the page frame to

which the following addresses belong: 0x178A67,

0x10101, 0x8FF000.

Using the shift

right C operator (>>), give a C expression that quickly

calculates the page frame number of a given

address. |

The kernel keeps track of the current status of each page

frame in memory using an array of page

structures. Each element of this array corresponds to a page

frame in physical memory. The array is defined as following:

struct page *mem_map;

For example, mem_map[0] contains information

about the first page frame in memory (address range 0x0 -

0xFFF). The exact location in memory of the

mem_map array depends on the size of the

kernel. The total number of page frames in memory is given by

max_mapnr.

A page structure is defined in include/linux/mm.h,

line 151, as following:

struct page {

struct list_head list; /* ->mapping has some page lists. */

struct address_space *mapping; /* The inode (or ...) we belong to. */

unsigned long index; /* Our offset within mapping. */

struct page *next_hash; /* Next page sharing our hash bucket in

the pagecache hash table. */

atomic_t count; /* Usage count, see below. */

unsigned long flags; /* atomic flags, some possibly

updated asynchronously */

struct list_head lru; /* Pageout list, eg. active_list;

protected by pagemap_lru_lock !! */

wait_queue_head_t wait; /* Page locked? Stand in line... */

struct page **pprev_hash; /* Complement to *next_hash. */

struct buffer_head * buffers; /* Buffer maps us to a disk block. */

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

struct zone_struct *zone; /* Memory zone we are in. */

}

In this document we will only consider the fields in bold.

- The

flags field determines the properties of

a page. Flags are described in mm.h, line

275. For example, the PG_reserved flag indicates

that a page is reserved for kernel use or for some other

special purpose and cannot be touched.

- The

count field indicates if the page is free

or being used. If count is zero, the page is free, otherwise

the page is in use.

- The

zone field indicates the memory zone to

which the page belongs. Zones are described below.

On Linux 2.4.18, physical memory is divided into

zones. Each zone contains a fixed number of

continuous pages having some specific property. On i386

machines there are only three possible memory zones:

ZONE_DMA (4096 pages), contains pages

suitable for DMA (Direct Memory Access)

operations. Because of a limitation in ISA DMA controllers,

only the first 16MB of physical memory can be used for direct

memory access. Therefore, ZONE_DMA contains 4096

pages (4096*4KB=16MB) identified by memmap[0]

through memmap[4095].ZONE_NORMAL contains pages available

for normal (kernel and user) use. The size of this zone

depends on the amount of RAM on your computer. For example, on

a machine with 512 MB of RAM, the number of pages in

ZONE_NORMAL will be

NormalPages = (TotalMemory - DMAMemory) / PageSize =

= (512 MB - 16 MB) / 4 KB =

= 496 MB / 4 KB =

= 507,904 KB / 4 KB =

= 126,976 pages

ranging from memmap[4096] to memmap[131071].

ZONE_HIGHMEM, generally not used; it

contains 0 pages.

Upon allocation request, the buddy system algorithm attempts

to allocate pages within a goal zone, where the goal

zone depends on the properties of the requested pages. If this

zone fails, the other zones are also considered. The buddy

system will be explained later.

|

Q2. Given a page size of 4096 bytes (4 KB), calculate

the number of pages belonging to the normal zone

(ZONE_NORMAL) if the total amount of memory

is 128 and 256 MB. Also calculate the page index range in

memmap[] for each case. |

A zone data structure is defined in include/linux/mmzone.h, line

36, as following:

struct zone_struct {

/*

* Commonly accessed fields:

*/

spinlock_t lock;

unsigned long free_pages;

unsigned long pages_min, pages_low, pages_high;

int need_balance;

/*

* free areas of different sizes

*/

free_area_t free_area[MAX_ORDER];

/*

* Discontig memory support fields.

*/

struct pglist_data *zone_pgdat;

struct page *zone_mem_map;

unsigned long zone_start_paddr;

unsigned long zone_start_mapnr;

/*

* rarely used fields:

*/

char *name; /* "DMA", "Normal" or "HighMem" */

unsigned long size; /* size in pages */

};

Allocating pages

The function in charge of allocating pages is

__alloc_pages(), implemented in kernel/mm/page_alloc.c,

line 311:

struct page * __alloc_pages(unsigned int gfp_mask, unsigned int order, zonelist_t *zonelist)

The function attempts to allocate 2order

pages. The number of pages allocated is always a power of two,

in order to reduce external fragmentation.

The gfp_mask argument specifies the priority of

the request and use of the page. This parameter can be one the

GFP_xxx symbols, defined in

include/linux/mm.h, line 539. A high priority

request (e.g. GFP_ATOM) will cause the kernel to

do everything possible to satisfy the request immediately,

including swapping out used pages if no free pages are

available. On the other side, a low priority, such as

GFP_USER, could put the current process to sleep

if no free pages are available.

The zonelist argument contains a list of memory

zones where the algorithm will look for free pages. The

function will try to allocate the requested pages in the first

zone of the list, the goal zone. If this fails, the

other zones in the list are considered. In this document we

will always assume ZONE_NORMAL to be the goal

zone.

When looking for free pages in a given zone, the function

examines the zone's free_area[] array. Each

element free_area[K] of this array contains a

pointer to a list of areas of 2K free continuous

pages in the memory zone. K is called the

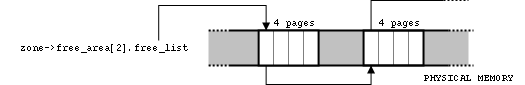

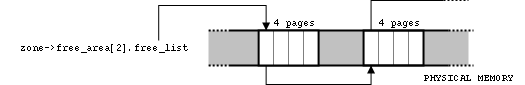

order of the free area list. For example:

zone->free_area[2].free_list

is a list of free areas of 4 (22) pages.

A list of free areas of 4 pages.

If we go back to the __alloc_pages() function, we

can now see that to satisfy a request of 2order

pages the function must look for a free area in the

zone->free_area[order].free_list list. If a free

area of such order cannot be found, the function looks in the

free area list of the next order.

The algorithm can be summarized as following:

struct list_head *head, *curr;

struct page *page;

do {

head = &zone->free_area[order].free_list; /* the free area list */

curr = list_next(head); /* first element in the list */

if (curr != head) { /* check if the free area list contains elements */

page = list_entry(curr, struct page, list);

list_del(curr); /* remove area from the free list */

/* ... */

return(page);

}

/* the free area list of this order is empty,

try the list of the following order */

order++;

} while(order < MAX_ORDER);

/* cannot find an appropriate area in this zone */

return(NULL);

|

Q3. The free_area[4].free_list list of a

zone contains 13 areas. What is the size (in pages) of a

single area? What is the total number of pages referenced

by this list? |

|

Q4. What is the maximum size (in pages) of a free area

supported by the Linux kernel? |

Parsing a free area list

The following code can be used to parse a free area list of

order order in the ZONE_NORMAL zone:

struct list_head *head, *curr;

struct zone_struct *zone;

...

zone = &contig_page_data.node_zones[ZONE_NORMAL]; /* ZONE_NORMAL */

head = &zone->free_area[order].free_list; /* head of the list */

list_for_each(curr, head) {

struct page *page = list_entry(curr, struct page, list);

/* "page" is now the first page of the current area */

...

}

The list_for_each() macro expands to a

for loop which goes through every element of the

list. In each iteration, the current position in the list is

represented by the curr pointer. To retrieve a

page structure from this element, we need to use

the list_entry() macro.

|

Q5. To which zone does page 4096 belong to? What

about page 2304? (You may want to review the Keeping track of pages section of this

document) |

|

Q6. pageinfo-skel.c is

a kernel module that creates a /proc entry

called pageinfo. Reading from

/proc/pageinfo will output general

information about memory and the normal zone

(ZONE_NORMAL). Extend the

pageinfo_proc_read() function in this module

so that it also outputs the number of areas in

each of the zone's free area lists (use the code above as

a reference). Also print the total number of pages

referenced by each list. The final output should be

something similar to this:

[ealtieri@italia os]$ cat /proc/pageinfo

...

FREE AREA LISTS:

order 0: 0 areas (0 pages)

order 1: 72 areas (144 pages)

order 2: 55 areas (220 pages)

order 3: 31 areas (248 pages)

order 4: 17 areas (272 pages)

order 5: 4 areas (128 pages)

order 6: 3 areas (192 pages)

order 7: 2 areas (256 pages)

order 8: 0 areas (0 pages)

order 9: 3 areas (1536 pages)

In order to compile the device driver, you will need to

add the line

EXPORT_SYMBOL(contig_page_data); to

kernel/ksyms.c and recompile the kernel

using "make clean dep bzImage". |

Counting page faults

Unfortunately, the kernel does not have a built-in page fault

counter. In order to keep track of the number of page faults

on the system, we need to implement our own counter. This can

be done with a few simple modifications to the page fault

handler, do_page_fault(), in arch/i386/mm/fault.c, line

150.

We declare a counter page_faults to keep track of

the number of page faults. The counter must be initialized to

0 and incremented at every page fault. The right place to

increment page_faults is at the beginning of

do_page_fault(), as shown below in bold:

/* in arch/i386/mm/fault.c */

...

unsigned long page_faults = 0; /* page faults counter */

...

asmlinkage void do_page_fault(struct pt_regs *regs, unsigned long error_code)

{

...

tsk = current;

/* increment page fault counter */

page_faults++;

...

At this point the kernel is correctly counting every page

fault. However, the use of page_faults is limited

to the current module: fault.c. We now want to

make the counter accessible from everywhere in the kernel

using the extern directive. Add the code shown in bold

below to include/linux/mm.h:

/* include/linux/mm.h */

#ifndef _LINUX_MM_H

#define _LINUX_MM_H

...

#ifdef __KERNEL__

#include <linux/config.h>

#include <linux/string.h>

#include <linux/list.h>

#include <linux/mmzone.h>

#include <linux/swap.h>

#include <linux/rbtree.h>

/* Page Faults Counter (implemented in arch/i386/mm/fault.c) */

extern unsigned long page_faults;

...

Finally, we need one more small change to the kernel. Even

with the extern directive, the page_faults

counter is not accessible to a kernel module. This is because

our kernel modules are injected into the operating system at

run-time (dynamic linking), instead of being statically

linked to the kernel at compile-time.

In order to make our counter accessible to kernel modules and

device drivers, we need to export

page_faults using a special macro called

EXPORT_SYMBOL(name). The file kernel/ksyms.c contains a

list of all of the exported symbols in the kernel. Add the

line shown below to this file, possibly in the "internal

kernel memory management" section:

EXPORT_SYMBOL(page_faults);

At this point you are ready to recompile the new kernel with

"make clean dep bzImage".

|

Q7. Add a page fault counter to the kernel as

explained in this section, then recompile and install the

kernel. To test the new kernel, modify pageinfo-skel.c so

that it prints the number of page faults occurred since

system reset. |